From the prompt, the question that stood out to me most was: “What do [neural networks] offer as media for our internal life?” Currently, my relationship with large language models is one of pure function and text, not media or art. I ask Claude to help with my code. The model combs through whatever it was trained on, along with whatever it scrapes from Stack Overflow threads and other sources, and I get a chunk of code that either works or needs further debugging. The metaphor I use to explain AI to my parents is a thresher, or a combine harvester. The machine sweeps over a field, separating the useful grain from everything else that was planted and beating away the chaff (where most of my time with AI is spent), with limited concern for the environment. An LLM’s output is only as good as the input it works from, except that our information is the field it harvests: it extracts from all of us the bits relevant to whomever it is responding to. I have never seriously considered these harvesting neural networks as approaching my internal life, but what exactly are the gaps?

Mark Riedl’s Medium article outlines the predict-and-guess logic of LLMs very well. And according to last week’s reading of Donald Hoffman (and our class discussion of it), our consciousness (or interface, or what have you) is constantly guessing what reality is, grading those guesses by survival logic, and serving them up to our UI accordingly. With this framing, my mind and the AI might not be as far apart as I thought. But then I thought of music. Not of producing music (models can do that pretty well), but of experiencing it. Listening to a song and unpacking it is one of the richest, most emotionally engaging experiences I have on a daily basis. Through music, I relate to other human beings in ways that just aren’t possible otherwise. Something sublime is communicated in the texture of the sound waves, the mathematical arrangement of frequencies, and the color of tones that no other medium can carry. Can an LLM listen to a song with me and have the same experience alongside me?

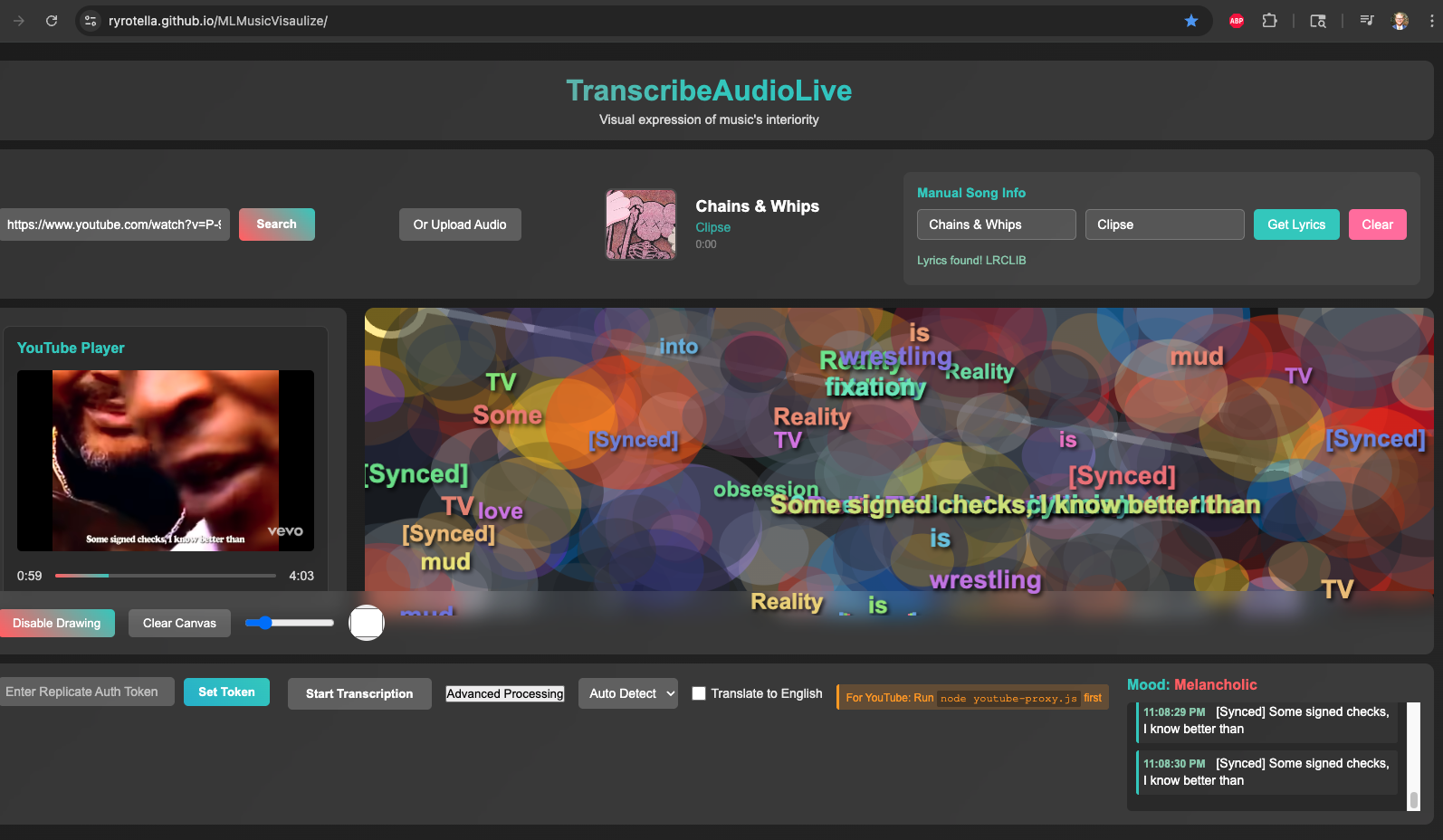

This is what motivated my coding experiment this week. I built an experience where people input a song, either as a YouTube URL (the audio can easily be extracted through their API; I recommend this approach) or as an uploaded file. Then you can look up the song’s lyrics in a lyrics database. The code writes the lyrics on the canvas, synced to the moment each line is sung. I originally wanted the Whisper model to transcribe the vocals live, but Claude Code and I found this lyrics-database workaround worked better. Isolating vocals from the rest of the music is something humans are very good at; ML models might be too, but it’s much harder to set up for them (at least when I’m the one doing it).

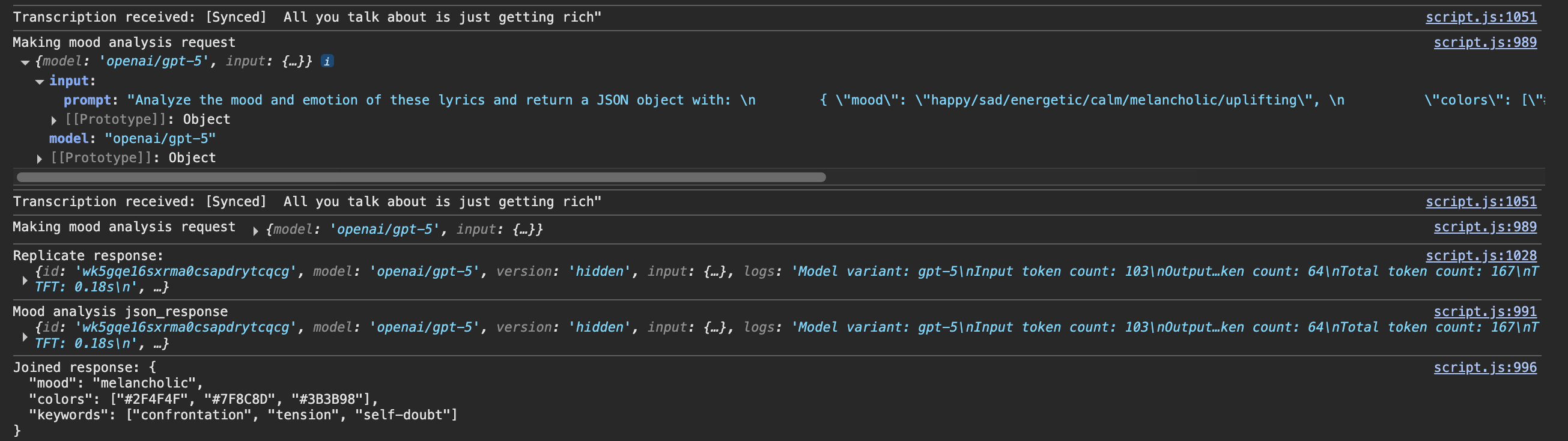
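One way to do the syncing, assuming the lyrics database returns time-stamped lines in the LRC format (`[mm:ss.xx] lyric`), is to parse the timestamps once and then, on every animation frame, look up the last line whose start time has already passed. The sketch below is a hypothetical Python illustration of that idea (`parse_lrc` and `current_line` are made-up names), not the project’s actual code:

```python
from __future__ import annotations

import bisect
import re

# Matches one LRC timestamp tag such as "[01:23.45]" (minutes:seconds).
# ASSUMPTION: the lyrics source returns time-stamped lines in LRC format.
TAG = re.compile(r"\[(\d+):(\d+(?:\.\d+)?)\]")


def parse_lrc(lrc_text: str) -> list[tuple[float, str]]:
    """Return (start_time_in_seconds, lyric_line) pairs sorted by time."""
    lines = []
    for raw in lrc_text.splitlines():
        tags = TAG.findall(raw)
        if not tags:
            continue  # skip metadata tags like "[ar:Artist]" and untimed lines
        text = TAG.sub("", raw).strip()
        # One line can carry several tags when a lyric (e.g. a chorus) repeats.
        for minutes, seconds in tags:
            lines.append((int(minutes) * 60 + float(seconds), text))
    lines.sort(key=lambda pair: pair[0])
    return lines


def current_line(lines: list[tuple[float, str]], playback_time: float) -> str | None:
    """Return the lyric whose start time most recently passed, or None before the first."""
    starts = [start for start, _ in lines]
    index = bisect.bisect_right(starts, playback_time) - 1
    return lines[index][1] if index >= 0 else None


if __name__ == "__main__":
    sample = "[ar:Example Artist]\n[00:12.00]First line\n[00:15.50]Second line\n[00:20.00][00:40.00]Chorus"
    timed = parse_lrc(sample)
    print(current_line(timed, 16.0))  # prints "Second line"
    print(current_line(timed, 5.0))   # prints "None" (nothing sung yet)
```

The binary search (`bisect`) keeps the per-frame lookup cheap even for long songs, which matters when it runs on every redraw of the canvas.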

Next, the lyrics get sent to GPT-5 to analyze their mood, and the model returns the mood, the colors associated with it, and keywords from its analysis. The code paints the canvas with these colors as soon as the request is processed. The model paints the scene of the song as I listen to it, while I paint it in my own mind.
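The mood step can be sketched the same way. Assuming the model is asked to reply with JSON holding a mood, a list of hex colors, and a list of keywords (the prompt wording and the JSON shape here are hypothetical, not what the project necessarily sends), the code only needs to build the prompt and turn the reply into colors the canvas can use. The API call itself is left out; this Python sketch only shows the prompt and the parsing:

```python
from __future__ import annotations

import json
import re
from dataclasses import dataclass

HEX_COLOR = re.compile(r"#[0-9a-fA-F]{6}")

# HYPOTHETICAL prompt wording; the real project may phrase this differently.
PROMPT_TEMPLATE = (
    "Analyze the mood of these song lyrics. Reply with JSON only, shaped as "
    '{{"mood": string, "colors": [hex strings like "#1a2b3c"], "keywords": [strings]}}.\n\n'
    "{lyrics}"
)


@dataclass
class MoodAnalysis:
    mood: str
    colors: list[tuple[int, int, int]]  # RGB triples ready to paint with
    keywords: list[str]


def build_prompt(lyrics: str) -> str:
    """Fill the lyrics into the (hypothetical) mood-analysis prompt."""
    return PROMPT_TEMPLATE.format(lyrics=lyrics)


def hex_to_rgb(hex_color: str) -> tuple[int, int, int]:
    """Convert "#rrggbb" into an (r, g, b) tuple of 0-255 ints."""
    return (int(hex_color[1:3], 16), int(hex_color[3:5], 16), int(hex_color[5:7], 16))


def parse_mood_reply(reply_text: str) -> MoodAnalysis:
    """Parse the model's JSON reply, silently dropping malformed colors."""
    data = json.loads(reply_text)
    colors = [
        hex_to_rgb(c)
        for c in data.get("colors", [])
        if isinstance(c, str) and HEX_COLOR.fullmatch(c)
    ]
    return MoodAnalysis(
        mood=str(data.get("mood", "unknown")),
        colors=colors,
        keywords=[str(k) for k in data.get("keywords", [])],
    )


if __name__ == "__main__":
    reply = '{"mood": "wistful", "colors": ["#2E4A7D", "not-a-color", "#F2C14E"], "keywords": ["rain", "leaving"]}'
    print(parse_mood_reply(reply).colors)  # prints [(46, 74, 125), (242, 193, 78)]
```

Dropping anything that isn’t a valid `#rrggbb` string means a malformed reply just paints with fewer colors instead of breaking the painting mid-song.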

I also give users the option to paint alongside the model while the song plays, and to clear the canvas as needed.

Predictably, the result was a colorful mess, not too dissimilar from my thought aquarium.

To conclude, the gap between what humans experience in their inner lives and how LLMs see things is still wide. I anticipate it will always be this way, but who knows? ML models are very obedient to whoever owns them and sets their parameters. They will always be good at following instructions, but they struggle to generate what we can without our input. Hopefully, we are not a mere resource to them, but their owners and the companies in charge certainly view us that way.
